Don’t rip out the battery, that’s free UPS!

If there’s nothing utterly specific from the Nextcloud ecosystem that you absolutely need, then no, Synology has you covered.

You seem to be obsessed with optimising one resource at the expense of others.

If you want to push it and paint me as obsessed with something, then let it be this: providing this community with on-topic and reasonable advice.

you’re only going to save a few MB of RAM.

This is false, and you should reread my previous message, which illustrates why: on a decent “self-host”-friendly machine, the same software may work very well, or not at all, depending on whether the user engages with very basic configuration. This goes beyond RAM (memory isn’t the only shared resource), and I’m adamant that the alternative (which went from “pretending that the problem doesn’t exist” to “throwing money at the problem”) is unreasonable.

On the other hand, if you’re doing it to learn more about computers then it might be worthwhile. This is a community of hobbyists, after all…

Or, more importantly: the extent to which you can self-host out of sheer luck and ignorance, as you suggest, is very limited. If you don’t want to engage with a minimum amount of configuration, you might run into security issues (a much broader and more complex subject) long before any of the above has a material impact.

I’m saying this based on real world experience

And do you think I would spend my time engaging if this didn’t come from my own very “real world experience” of lessons learned the hard way?

Bringing up “diminishing returns” as if this were an optimisation game doesn’t do this justice either. Take the typical “household FOSS package”, with software names often brought up in here: a Nextcloud instance, a photo-sharing service like Immich, private instant messaging, a software forge, a Subsonic-compatible audio/video streaming server, and a couple of PHP websites like Wallabag and RSS aggregators.

An Intel Atom CPU and 4GB of RAM are more than sufficient for all that, and will cost you single-digit USD a month, provided you put in the (one-time) effort to tune and balance those services. If you ran all of the above from upstream’s Docker files, I can guarantee that you would deem this (otherwise perfectly fine) server underpowered for the task at hand (and would probably go for a 10th-gen or so Intel Core CPU, quadruple the RAM, and 3-6× the energy cost in the process).

And that’s the point I’m making here: a self-hosting community of tinkerers should (ideally) know better, out of an ethical concern for keeping the process environmentally friendly and for not wasting other people’s money.

Do you have the data to back that up?

I mean, you are the one making the exceptional claim that unnecessarily running multiple instances of programs on a device with finite resources has no practical adverse effect. Of course, the effects can be more or less drastic depending on the many variables at play (hardware, software, memory pressure, thread starvation, cache misses, …) and can indeed be negligible in some lucky circumstances. The point is that you don’t call that shot, and especially not by burying your head in the sand and pretending it’s never gonna be a problem.

Effective use of computing resources requires tuning. Introducing a new service creates imbalance. Ensuring that the server performs nominally and predictably for all intended services is a balancing act, and a sysadmin’s job. Services whose deployment settings were chosen by someone with no prior knowledge of the deployment constraints can’t be trusted to do a good job at it (that’s the nature of the physical world we live in, not my opinion), and promoting this attitude promotes the kind of wasteful and irresponsible computing I was on about.

Now, here’s a link to a basic helper for tuning a PostgreSQL server: https://pgtune.leopard.in.ua/

Can you tell me what the correct inputs are for my homelab (I won’t tell you the hardware, the set-up, the other services running on it, the state of the system, etc.)?
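To make the point concrete, here is a rough sketch of memory sizing for PostgreSQL. The 25% / 75% split and the `work_mem` divisor are common rules of thumb I’m assuming for illustration, not pgtune’s exact formulas, and `rough_pg_memory` / `pg_share` are hypothetical names. The key input is how much of the box the database is actually allowed to use, which only the person running the box knows.

```python
# Rule-of-thumb PostgreSQL memory sizing (assumed heuristics, NOT pgtune's
# exact formulas). pg_share = fraction of the machine's RAM this instance gets.


def rough_pg_memory(total_ram_mb: int, pg_share: float, max_connections: int) -> dict:
    """Return hypothetical settings (in MB) for the slice of RAM PostgreSQL gets."""
    if not 0 < pg_share <= 1:
        raise ValueError("pg_share must be in (0, 1]")
    budget = int(total_ram_mb * pg_share)      # RAM this instance may really use
    shared_buffers = budget // 4               # ~25% of its budget
    effective_cache_size = budget * 3 // 4     # ~75% of its budget
    # Remaining memory split across connections, ~3 sort/hash operations each.
    work_mem = max(1, (budget - shared_buffers) // (max_connections * 3))
    return {
        "shared_buffers_mb": shared_buffers,
        "effective_cache_size_mb": effective_cache_size,
        "work_mem_mb": work_mem,
    }


# Same 4 GB box, two different "correct" answers:
print(rough_pg_memory(4096, 1.0, 100))   # PostgreSQL alone on the machine
# {'shared_buffers_mb': 1024, 'effective_cache_size_mb': 3072, 'work_mem_mb': 10}
print(rough_pg_memory(4096, 0.25, 100))  # one pooled instance among other services
# {'shared_buffers_mb': 256, 'effective_cache_size_mb': 768, 'work_mem_mb': 2}
```

Three bundled DB containers that each assume they own the whole 4 GB box would, under these heuristics, together claim 3 × 1024 MB of `shared_buffers` alone, before any application server gets a byte.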

And later, when you distribute your successful container to millions of users, what will you say to the angry ones who complain that your software is slow, through no fault of your code, because they happen to pile up multiple DBs, web servers, application servers, reverse proxies, … on their banana SoCs?

I disagree. You are just entertaining the idea that servers must always and forever be oversized, which is the definition of wasteful (and environmentally irresponsible). Unless you are firing up and throwing away services constantly, nothing justifies this, nor skipping the relatively low effort it takes to deploy your infrastructure knowingly.

That’s precisely what pre-DevOps sysadmins were saying when containers were becoming trendy. You are just pushing the complexity elsewhere and creating novel classes of problems for yourself (keeping your BoM under control and minimal is just one of many practices that got thrown away).

Self-hosting doesn’t mean “being wasteful and letting containers duplicate services”. I want to know which DB application X is using, so that I can pool it for applications Y and Z.
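Pooling in practice can be as simple as one PostgreSQL cluster with one role and one database per application. Below is a minimal sketch that generates that SQL; `provision_sql` and the `app_x`/`app_y`/`app_z` names are hypothetical, and it assumes the script is run by a role allowed to create roles and databases.

```python
# Hypothetical helper: one shared PostgreSQL cluster, one role + one database
# per application, instead of one bundled DB container per application.
import re
import secrets

_SAFE_IDENT = re.compile(r"^[a-z_][a-z0-9_]*$")  # refuse anything needing quoting


def provision_sql(apps: list) -> tuple:
    """Return (SQL script, {app: generated password})."""
    statements, passwords = [], {}
    for app in apps:
        if not _SAFE_IDENT.match(app):
            raise ValueError(f"unsafe identifier: {app!r}")
        pw = secrets.token_urlsafe(24)  # URL-safe base64: no quotes to escape
        passwords[app] = pw
        statements.append(f"CREATE ROLE {app} LOGIN PASSWORD '{pw}';")
        statements.append(f"CREATE DATABASE {app} OWNER {app};")
        # Other roles get no default access to this app's database.
        statements.append(f"REVOKE ALL ON DATABASE {app} FROM PUBLIC;")
    return "\n".join(statements), passwords


sql, creds = provision_sql(["app_x", "app_y", "app_z"])
print(sql)
```

Feed the output to `psql` as a plain script: `CREATE DATABASE` cannot run inside a transaction block, so don’t wrap it in `BEGIN`/`COMMIT`. Each application then only needs a connection string pointing at the shared cluster.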

they don’t have a functioning prototype

That seems incompatible with their claim that they will ship this year.

Why would you think so? Can you give examples of specific tools that wouldn’t be available to mail clients? On the other hand, there are many things available in most email clients that are missing on GitHub, like tagging automation from custom and flexible rules, Turing-complete filtering, instant search, saved searches, managing the lifecycle of issues, linking with the VCS, etc., all in context and in one place.

People generally re-implement those on GitHub with bots, which leaves you at the mercy of what the bot can do (or what its admin wants you to do), and each project is its own silo that may break your workflow.
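As an illustration of “tagging automation from custom and flexible rules”, here is a minimal sketch of rule-based tagging over raw messages using Python’s standard `email` module. The addresses, the list ID and the rules themselves are hypothetical; a real setup would more likely live in the mail client’s own filter system (Sieve, notmuch tags, etc.).

```python
# Sketch: tag incoming mail with arbitrary rules. Because each rule is plain
# code, the "filter language" is as expressive as you want it to be.
from email import message_from_string

ME = "me@example.org"  # hypothetical address

# (tag, predicate over the parsed message)
RULES = [
    ("patch", lambda m: "[PATCH" in (m["Subject"] or "")),
    ("dev-list", lambda m: "dev.lists.example.org" in (m["List-Id"] or "")),
    ("to-me", lambda m: ME in (m["To"] or "") + " " + (m["Cc"] or "")),
]


def tags_for(raw: str) -> set:
    msg = message_from_string(raw)
    return {tag for tag, matches in RULES if matches(msg)}


raw = (
    "From: dev@example.net\n"
    "To: me@example.org\n"
    "List-Id: Project dev list <dev.lists.example.org>\n"
    "Subject: [PATCH v2 1/3] fix the thing\n"
    "\n"
    "body\n"
)
print(sorted(tags_for(raw)))  # ['dev-list', 'patch', 'to-me']
```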

I’m fine with GitHub because these days I’m mostly a casual contributor, but there’s a lost appreciation for the sheer power and universality of email-based workflows. That the largest projects (including the Linux kernel) run on it should speak for itself.

I figured they’d be juggling a lot of mails

Yeah, but organized into as many threads as there are issues/PRs, so it’s exactly as daunting as the same list viewed on GitHub/project/issues (because it is exactly the same content).
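The “one thread per issue/PR” structure falls out of standard headers (`Message-ID`, `In-Reply-To`, `References`). Here is a simplified sketch of grouping messages by their thread root; full clients use more robust algorithms (e.g. JWZ threading), and the message IDs and subjects below are made up.

```python
# Sketch: group messages into threads via standard reply headers.
# Simplification: the first entry in References is taken as the thread root.
from collections import defaultdict
from email import message_from_string


def thread_root(msg) -> str:
    refs = (msg["References"] or "").split()
    if refs:
        return refs[0]                       # oldest ancestor we know of
    parent = (msg["In-Reply-To"] or "").strip()
    if parent:
        return parent
    return (msg["Message-ID"] or "").strip()  # a new thread starts here


def group_threads(raws: list) -> dict:
    threads = defaultdict(list)
    for raw in raws:
        msg = message_from_string(raw)
        threads[thread_root(msg)].append(msg["Subject"])
    return dict(threads)


mails = [
    "Message-ID: <issue-42@forge.example>\nSubject: Issue #42 opened\n\n",
    "Message-ID: <c1@forge.example>\nIn-Reply-To: <issue-42@forge.example>\n"
    "References: <issue-42@forge.example>\nSubject: Re: Issue #42\n\n",
    "Message-ID: <pr-7@forge.example>\nSubject: PR #7 opened\n\n",
]
print(group_threads(mails))
# {'<issue-42@forge.example>': ['Issue #42 opened', 'Re: Issue #42'], '<pr-7@forge.example>': ['PR #7 opened']}
```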

and I guess it is possible for some people to stay on top of that

It’s the crux of being a maintainer: it’s your job “to stay on top of that”, and on larger projects, ad-hoc tooling and automation are the only way to do it. Email is infinitely more flexible than GitHub’s one-size-fits-all take on that.

In what way do you expect this to strengthen copyright laws? Also, from the article, it reads like Anthropic implicitly admits to copyright infringement, and that their defence essentially boils down to “if you prosecute us, we will go bankrupt”. I don’t see how that flies, but then again, IANAL :-)

It’s pretty simple: if Anthropic wins, that’s the end of US copyright law, replaced by the diktat of the tech bros (worse for artists, and for anyone else but the tech oligarchs). If Anthropic loses, nothing changes and we get to fight the (comparatively tiny) copyright mafia another day.

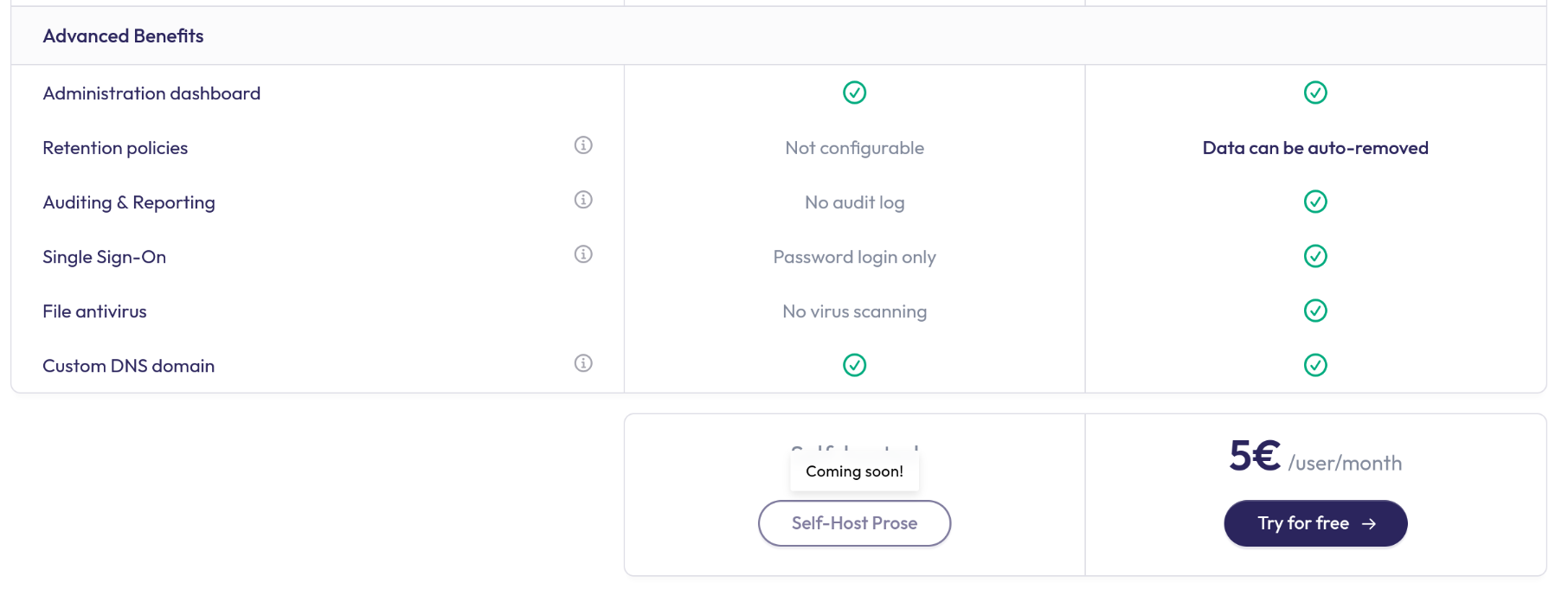

I don’t see what you’re describing 🤷 “Soon” only appears once on the page, and not in this context for me.

It appears as a tooltip.

Anyhow, where I intended to draw your attention was https://prose.org/downloads

You can just download the client for your platform (assuming one is available), use the web one (otherwise), or build one from the sources I linked (which is what I do), and log in with your usual XMPP account. If you need an account and have to decide which provider to register with, this will come in handy: https://providers.xmpp.net/

In this set-up, prose.org isn’t hosting your account, and Prose will of course let you interact with thousands of users or more, like any other XMPP client.
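Why the client doesn’t tie you to prose.org: an XMPP client derives the server to connect to from the domain part of *your* address and resolves the `_xmpp-client._tcp` SRV record for that domain (RFC 6120), falling back to the domain itself on port 5222 if there’s no record. A minimal sketch that only builds the query name (no DNS lookup); `xmpp_client_srv_name` and `alice@example.org` are hypothetical.

```python
# Sketch: which DNS SRV name an XMPP client would query for a given account.
# JID shape: [localpart@]domainpart[/resourcepart]


def xmpp_client_srv_name(jid: str) -> str:
    bare = jid.partition("/")[0]                 # drop the optional /resource
    domain = bare.partition("@")[2] if "@" in bare else bare
    if not domain:
        raise ValueError(f"not a usable JID: {jid!r}")
    return f"_xmpp-client._tcp.{domain.lower()}"


print(xmpp_client_srv_name("alice@example.org/prose"))  # _xmpp-client._tcp.example.org
```

Whatever client you log in with, the connection goes to the provider behind your own domain.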

Those Silicon Valley tech billionaires are businessmen who, by the looks of it, have completely fallen for their own marketing and secluded themselves in a weird echo chamber packed with sycophants and profiteers. They are not superior beings. They have neither the credentials nor the academic standing that would make them authorities worth listening to. Anyone with a critical mind and access to the scientific literature understands the actual challenges behind “uploading one’s brain to the cloud” better than they do, and can debunk that science-fictionesque bullshit.

All there is to this is a bunch of aging megalomaniacs with too much power, except over death, which scares the crap out of them and makes them say some stupid shit. And I hate that we sanewash this just because they are rich and influential. As a society, we should kick them back to where they belong, which is a court of law, for their continued efforts to dismantle our society.

Just below, you’ll find a section about “self hosting (soon)”, though you can already use it with your own XMPP account as a standalone client (no questions asked), like I do, or, optionally, with the server-side components (an open-source Prosody module).

Edit: adding https://github.com/prose-im

See my other comment: if you already have an XMPP account, Prose is just another client that you can use however you like, for free (and at this point, everyone should have an XMPP account, if you ask me). If you don’t have an account, they can act as a service provider (but this being a decentralized network, they don’t want to encourage hosting everyone on the same server).

It is not spam, and you misread it. Prose is an open-source XMPP client. They can set you up (host on your behalf) for free, up to a certain point. You can pay for it (there is a commercial offering), or, if you self-host, you can use it unlimited, with no costs beyond your own server’s. It’s all being developed in the open, in case you don’t want to take my word for it: https://github.com/prose-im

In terms of tech and implementation details, everyone has been converging for years towards the same WebRTC architecture (with everyone bundling/linking the same set of basic components and libs as found in Chrome, Android, …). As such, a call between two participants (or a group call with fewer than a dozen participants) should be as good on XMPP as anywhere else (including the commercial options like Google Meet, Zoom, Matrix, …).

Of course there are caveats, like relying on TURN where a direct connection is impossible, but that’s the gist of it. Regarding XMPP group calls:

Where things start getting spicier is in large group calls (dozens of participants or more), which require the streams to be brokered by a central server (SFU), with stream re-compression and optimisation. Standard XMPP isn’t great for that yet (non-standard XMPP, like Jitsi, which is built on it, is pretty damn good, but unavailable from your regular XMPP setup). Work is ongoing to improve that on two fronts: some XMPP servers are turning into SFUs, and a protocol is being designed for offloading AV streams to any willing existing SFU.

The problem with large group calls essentially boils down to how much bandwidth and CPU you want to throw at it, and that’s not cheap (unless, of course, you are the product, i.e. Google Meet, Discord et al.). The same applies to self-hosted Matrix/Galene/Jitsi: you probably won’t want to hold a large conference call on a home server, and the server admins are bearing some costs, so get to know them and how sustainable that is. In the case of Matrix.org, it is not.
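A back-of-the-envelope sketch of why bandwidth is the bottleneck, assuming every participant sends one video stream at the same bitrate and receives everyone else’s, with plain forwarding (no simulcast layers, no audio-only users, no TURN relaying). The 1000 kbps figure is an arbitrary assumption.

```python
# Bandwidth for an n-person call where everyone sends one stream at `kbps`
# and receives all the others. Full mesh vs. a forwarding SFU.


def mesh(n: int, kbps: int) -> dict:
    # Peer-to-peer: each client uploads its stream to every other client.
    return {"client_up": (n - 1) * kbps, "client_down": (n - 1) * kbps,
            "server_in": 0, "server_out": 0}


def sfu(n: int, kbps: int) -> dict:
    # SFU: each client uploads once; the server sends n-1 streams to each client.
    return {"client_up": kbps, "client_down": (n - 1) * kbps,
            "server_in": n * kbps, "server_out": n * (n - 1) * kbps}


for n in (2, 10, 50):
    print(n, mesh(n, 1000)["client_up"], sfu(n, 1000)["server_out"])
# 2 1000 2000
# 10 9000 90000
# 50 49000 2450000
```

Mesh quickly exhausts home upload links, while the SFU’s egress grows roughly with n²: around 2.45 Gbit/s for 50 participants at 1 Mbit/s each, which is why someone has to pay for large calls.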

No idea what prose is.

Prose is an open-source XMPP client with a focus on large rooms/banquet-style conversations (like IRC, Slack, …). It is still in its early stages, but already quite usable and possibly a good fit for a subset of Skype refugees.

I mean, a mechanical timer costs, like, 3 bucks in any currency and lets you set charge and discharge cycles. Add 10 bucks and you have one that you can control via a REST API.
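All a timer does here is switch the charger on inside fixed windows. Here is a minimal sketch of that logic, with hypothetical charge windows; with a network-controlled plug you would just push the result to whatever API your particular plug exposes (not modelled here).

```python
# Sketch of timer-style charge/discharge cycles: the plug is on only inside
# configured windows. The windows below are hypothetical.
from datetime import time

CHARGE_WINDOWS = [(time(1, 0), time(3, 0)), (time(13, 0), time(14, 30))]


def plug_on(now: time, windows=CHARGE_WINDOWS) -> bool:
    for start, end in windows:
        if start <= end:
            if start <= now < end:
                return True
        elif now >= start or now < end:  # window wraps past midnight
            return True
    return False


print(plug_on(time(2, 15)))  # True: charging
print(plug_on(time(4, 0)))   # False: running on battery
```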