Virtualization generally isn’t required for docker on Linux, unless a container tries to use KVM or something like that. Also, docker already exists in Ubuntu’s repos as the docker.io package, so that’s the easiest place to install it from (apt install docker.io).

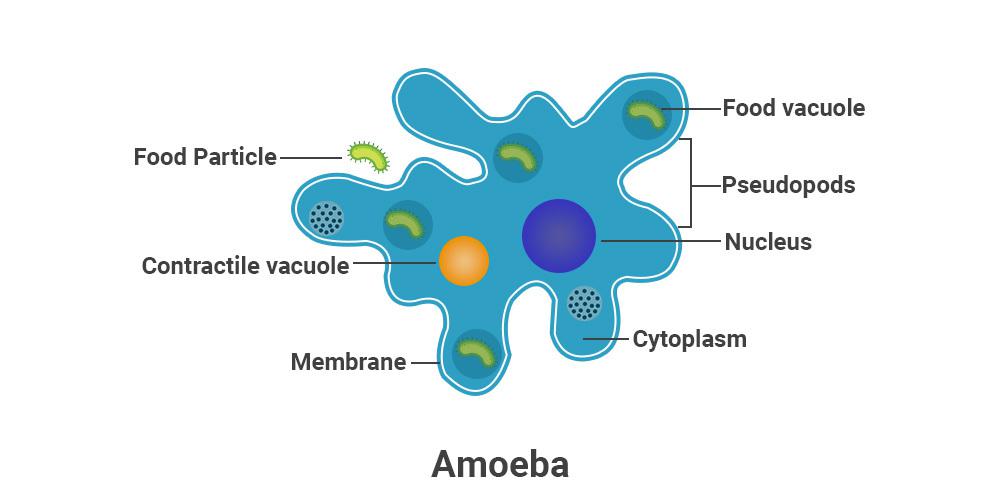

Avid Amoeba


3·5 days ago

Already done. I’m just trying to exhaust all the hypotheses I have in case I stumble upon a durable workaround that is applicable for others and cheaper. Good USB add-in cards are not cheap.

3·5 days ago

I’ve been trying. Nothing has worked so far. I’ve got a few more cables/permutations to try.

2·5 days ago

You’re right, the correct term is Gb/s or Gbps. Edited.

7·5 days ago

The thing is that I’m already at the last couple of leaves in the investigation tree and I’m not willing to change anything upwards of the USB driver level. That’s why there isn’t much point in getting people to spin their wheels for solutions I can’t or won’t apply. If I was completely unable to get the data corruption and disconnects under control, I’d trash the system and replace it with Intel. Fortunately, a PCIe add-in USB controller seems to work well so I avoided the most costly solution. At this point I don’t actually need to get the motherboard ports to work well but I’m curious to follow down the signalling rabbit hole because I’m not the only one who’s having this problem and the problem doesn’t affect just this one use case. If I find a solution like an in-line 5Gb USB hub (reduces data rate), or just using USB-C ports instead of USB-A (reduces noise), or using this kind of cable instead of that kind, I could throw that as a cheaper workaround in this ZFS thread and elsewhere. The PCIe cards work but aren’t cheap.

7·5 days ago

Unfortunately it won’t because the transfers are happening between ZFS and the hardware storing the data so I can’t control the data rate at the application level (there are many different applications) or even at the ZFS level. This is why in this particular case I’m stuck with a potential hardware-related workaround. I mean I could do something stupid like configuring a suboptimal recordsize in ZFS but I’d prefer to get the hardware to stop losing bits and hoping ZFS would catch that. Decreasing data rates is a generally acceptable strategy to deal with signalling issues, if the decreased rate is usable for the application at hand. In my case it is.

12·5 days ago

I am trying to transfer data via USB at high speed without data corruption, silent resets and occasional device disconnects. Those are things that happen because the USB controllers on my motherboard made by AMD with some help from ASMedia do not function correctly at the speed they advertise. So given the problem the right solution is to get a firmware or hardware fix for these USB controllers, however that’s unlikely to happen. So I’m trying to find a workaround. I already have one (PCIe add-in card) but now I’m also testing running the bad controllers at half-speed which seems successful so far but I was wondering if there’s a way to do it in software. I’m currently bottlenecking the links by using 5Gb hubs between the controllers and the devices.

2·5 days ago

Yup. A USB host controller. Specifically AMD Bixby.

10·5 days ago

Great question. In short, garbagy AMD USB controllers. I recently switched to a newer AMD board and have been hit with the same issues faced by these poor sods. I’ve been conducting testing over the last week, different combinations of ports, cables, loads, add-in PCIe USB controllers. The add-in cards seem to behave well, which is one way the folks from that thread solved their problems. The other being changing to Intel-based systems. Yesterday however I was watching an intro about USB redrivers by TI and they were discussing various signalling issues that could occur and how redrivers help. That led me to form the hypothesis that what I’m experiencing might be signalling related. E.g. that the combination of controllers/ports/cables simply can’t handle 10Gbps. That might be noise from some of those devices or surrounding ones that causes signal loss when operating at 10Gbps, speeds this setup can actually achieve. In order to test that I tried placing the DAS boxes behind a 5Gb hub plugged in a port that has previously shown a failure. So far it’s stable. This is why I was wondering whether there’s some magic in the kernel that could allow configuring 10Gb ports to operate at 5Gb.
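
For anyone repeating the hub test, one way to confirm that a device behind a 5Gb hub really negotiated the lower rate is to read the speed attribute the kernel exposes for each USB device in sysfs. Below is a minimal sketch, assuming the usual /sys/bus/usb/devices layout. The usb_speeds function name and its optional directory argument are made up for illustration. It only reports the negotiated rate, it does not change it.

```shell
# Print the negotiated link speed (in Mb/s) of every USB device that exposes
# a "speed" attribute in sysfs.
# Assumption: the directory argument is hypothetical and exists only so the
# function can be pointed at another tree; by default it reads the real sysfs.
usb_speeds() {
    root="${1:-/sys/bus/usb/devices}"
    for dev in "$root"/*; do
        # Interface entries such as 2-1:1.0 have no speed file; skip them.
        [ -r "$dev/speed" ] || continue
        speed=$(cat "$dev/speed")
        # Not every device reports a product string.
        product=$(cat "$dev/product" 2>/dev/null || echo "unknown")
        printf '%s\t%s Mb/s\t%s\n' "$(basename "$dev")" "$speed" "$product"
    done
}
```

Calling usb_speeds with no arguments lists the devices on the running system. A device on a 10Gb link should show 10000, and one sitting behind a 5Gb hub should show 5000.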

2·5 days ago

The extension cable is a great idea. I’m currently trying 5Gb hubs on the path. Seems to work.

E: I think the USB-A connector for 5Gb and 10Gb is the same. The 10Gb cable must simply carry double the rate without losing data due to noise. Similar to Cat 5 vs Cat 6 ethernet cables. If so an extension should keep the controller-advertised speed downstream. Seems like hubs are the only option.

511·5 days ago

Oh yes, X would be AMD fixing their defective USB controllers but that won’t happen on a system produced years ago. 😂

1·5 days ago

Try to use the standard virtual desktop functionality. It’s not going to behave the exact same way but you might find a workable adaptation.

You can pass through USB to VMs.

You can use Premiere in a VM. Depending on what you want to do and how fast your CPU is, you might be able to use it without any special config. If you need to pass through a GPU, you can, but the config isn’t trivial. Definitely doable though.

Generally you’d want to use KVM (with virt-manager) for virtualization but it doesn’t support any 3D acceleration in Windows yet. The result is that the Windows UI in a VM is not “smooth.” It’s usable but not smooth. If you need acceleration you’d have to do GPU passthrough. There’s some ongoing work to get basic acceleration without passthrough but it isn’t done yet. Both VirtualBox and VMware have basic 3D acceleration for Windows VMs. They have other pros and cons but if you find that one of them works better than KVM for your use case, go ahead and use that. We use both VirtualBox and VMware for different purposes at work. I know of people who use all sorts of engineering CAD software in VMware.

You already trust these people today. They run/own many large corporations today which dramatically affect our lives in a multitude of ways. Except today we can’t remove them from these positions of power under the current system.

It’s thanks to this in part that your aunt keeps indulging her imaginary pain when she thinks about your lifestyle.

2·9 days ago

groovy.gif

15·9 days ago

DevOps is often glorified Bash programming.

111·9 days ago

I think for games, people need newer kernels and drivers to support the newer hardware needed to play newer games, and they’re willing to put up with the bugs that come along with that. Ubuntu and Debian (stable) aren’t strong at that by definition. I always use an older GPU that’s supported well by the Ubuntu LTS I run. If it doesn’t play something, I’ll wait till a new driver lands in that LTS or the next.

953·10 days ago

Good person! This is how you learn Linux and gain experience. Trying to understand why something happened and trying to fix it using that understanding. Not “just reinstall” or worse “you should use X distro instead.”

1·11 days ago

You could try finding changed config files by running:

sudo debsums -ac

Note that this won’t catch all. There are files that packages install and don’t touch afterwards. In my case for example it does catch that /etc/gdm3/custom.conf was modified to enable autologin among other things.

I’d advise against using docker from docker.com’s repo on Ubuntu unless you need to. Ubuntu LTS includes a fairly recent docker package starting with 22.04. By using that you eliminate the chance of breakage due to a defective or incompatible docker update. You also get the security support for it that comes with Ubuntu. The package is docker.io.